Definition

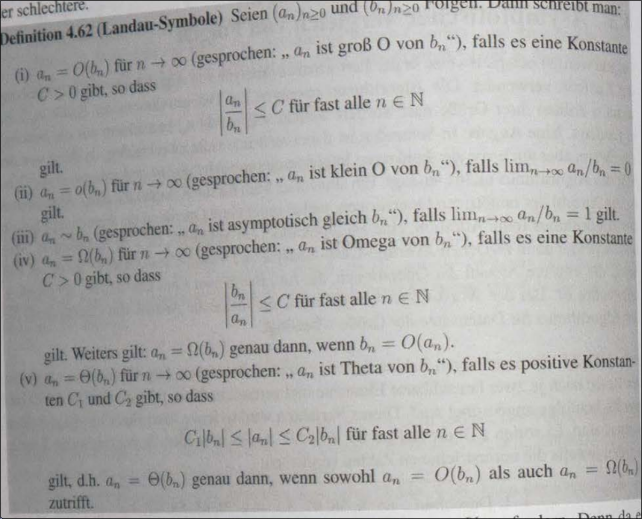

Big O Notation

The Big O notation is a notation for analysing the asymptotic complexity of a program or algorithm.

The notation is used to describe the lower and upper bounds of the runtime of a program for an input size :

where is the lower bound, is the upper bound and is the smallest input size for which the runtime is not negligible.

To simplify the notation, we can express the runtime in terms of and as follows:

which is sometimes also expressed using the notation , which must not be interpreted as equality.

If the upper bound is equal to the lower bound , then we can say that:

Schematic Foundation

Boundedness

where is the lower bound, is the runtime and is the upper bound.

Neglect Constant Factors

We are allowed to add a constant factor to the lower bound and a constant factor to the upper bound to constraint the runtime .

Neglect Small Input Sizes

We can specify a minimum input size for which (below) the runtime is not negligible.

Properties

Additivity

If and , then it holds that , where

Dominance

where , , .