machine-learning transformers attention

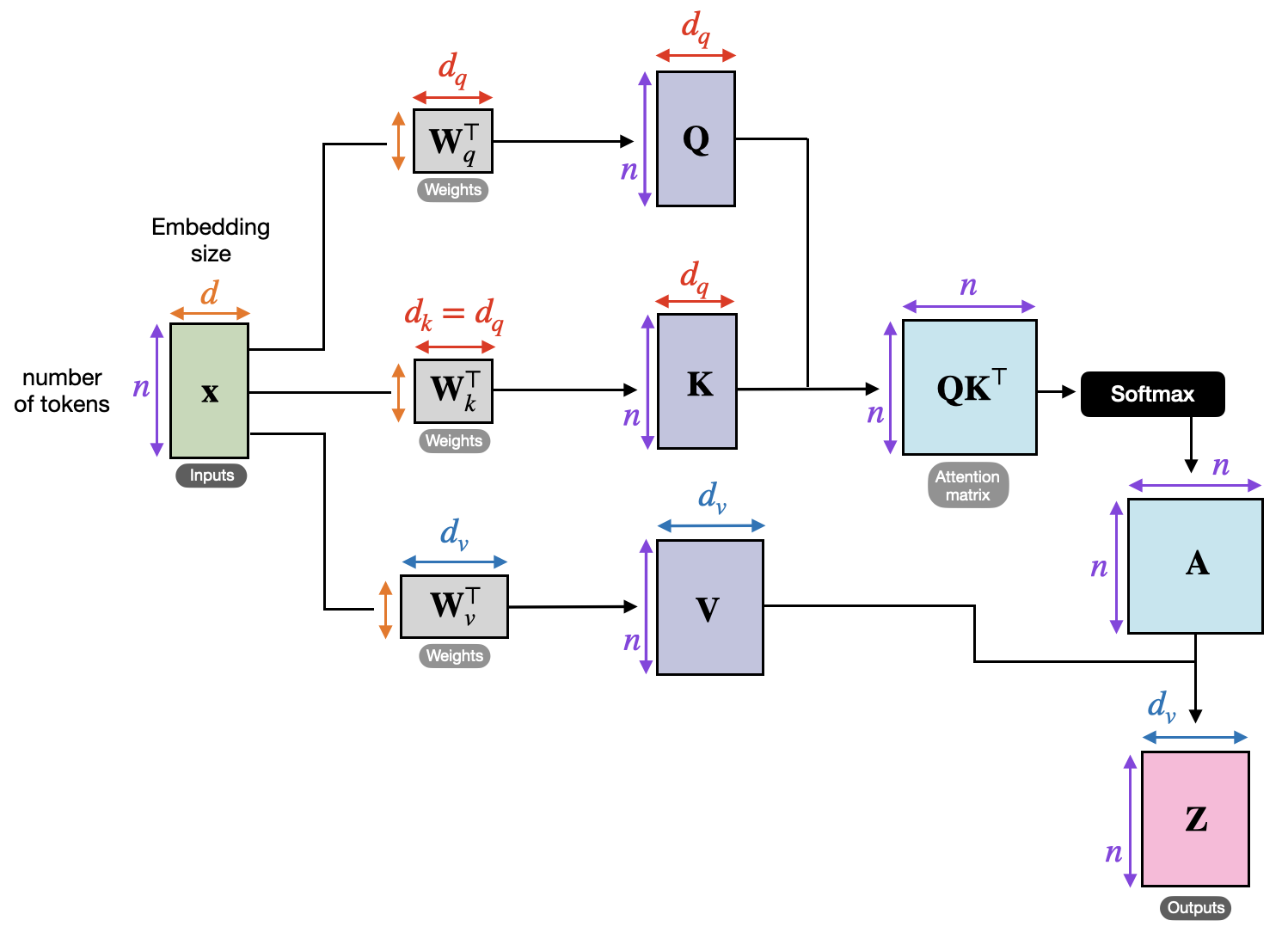

Self-Attention

Self-attention, sometimes called intra-attention, is an attention mechanism that relates different positions of a single sequence in order to compute a representation of that sequence. [1]
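A worked form, assuming the scaled dot-product attention used in the original Transformer (this note does not itself fix a scoring function). Here $X \in \mathbb{R}^{n \times d_{\text{model}}}$ is the input sequence of $n$ positions, and $W_Q$, $W_K$, $W_V$ are learned projection matrices introduced for illustration:

$$
Q = X W_Q, \qquad K = X W_K, \qquad V = X W_V
$$

$$
\operatorname{SelfAttention}(X) = \operatorname{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V
$$

Row $i$ of the $n \times n$ softmax matrix holds the weights with which position $i$ attends to every position of the same sequence $X$, so each output row is a weighted mix of the whole sequence.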

It is a special case of cross-attention in which the queries, keys and values are all computed from the same sequence.
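A minimal NumPy sketch of this relationship, assuming single-head scaled dot-product attention with randomly initialised projection matrices. The function names `cross_attention` and `self_attention` and all dimensions are illustrative, not taken from any particular library. Self-attention is obtained by passing the same sequence as both the query input and the key/value input:

```python
import numpy as np

# Illustrative assumption: single-head scaled dot-product attention, random weights.


def softmax(scores: np.ndarray) -> np.ndarray:
    # Subtract the row-wise max for numerical stability before exponentiating.
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def cross_attention(x_q, x_kv, w_q, w_k, w_v):
    """Queries come from x_q; keys and values come from x_kv."""
    q = x_q @ w_q                             # (n_q, d_k)
    k = x_kv @ w_k                            # (n_kv, d_k)
    v = x_kv @ w_v                            # (n_kv, d_v)
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (n_q, n_kv)
    weights = softmax(scores)                 # each row sums to 1
    return weights @ v, weights               # (n_q, d_v), (n_q, n_kv)


def self_attention(x, w_q, w_k, w_v):
    # Self-attention: cross-attention with both inputs set to the same sequence.
    return cross_attention(x, x, w_q, w_k, w_v)


rng = np.random.default_rng(0)
d_model, d_k, d_v = 8, 4, 4
w_q = rng.standard_normal((d_model, d_k))
w_k = rng.standard_normal((d_model, d_k))
w_v = rng.standard_normal((d_model, d_v))

x = rng.standard_normal((5, d_model))  # one sequence of 5 positions
y = rng.standard_normal((3, d_model))  # a different sequence of 3 positions

out_self, w_self = self_attention(x, w_q, w_k, w_v)
out_cross, w_cross = cross_attention(y, x, w_q, w_k, w_v)
print(out_self.shape, w_self.shape)    # (5, 4) (5, 5)
print(out_cross.shape, w_cross.shape)  # (3, 4) (3, 5)
```

Under cross-attention the query and key/value sequences may differ in length (3 and 5 positions here), whereas self-attention always produces a square weight matrix over the positions of its single input.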

Visualisation

Taken from [2].